Better AI Prompting quick tips

So I’ve read the prompting guides from Anthropic (Claude), Meta and some of the other model makers, but I think the video Y Combinator just released explains them well. If you are writing an AI prompt for anything even a little complex, you should first ask the AI to write a better prompt for you. This is called Meta Prompting.…
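The two-step workflow described above can be sketched in Python. This is a minimal sketch, not any vendor's API: `ask_model` is a hypothetical stand-in for whatever function sends a prompt to your LLM and returns its reply, and the wording of `META_TEMPLATE` is an assumed example of a meta prompt, not a quote from the Y Combinator video or any of the guides.

```python
from typing import Callable

# Hypothetical type: any function that sends a prompt to an LLM and returns
# its text reply (for example, a thin wrapper around your provider's SDK).
AskModel = Callable[[str], str]

# Assumed wording for the meta prompt; adjust to taste.
META_TEMPLATE = (
    "You are an expert prompt engineer. Rewrite the draft prompt below so it is "
    "clear, specific and complete, adding any context, constraints or output "
    "format a model would need. Reply with the improved prompt only.\n\n"
    "Draft prompt:\n{draft}"
)


def build_meta_prompt(draft: str) -> str:
    """Wrap a rough draft prompt in a request for a better prompt."""
    return META_TEMPLATE.format(draft=draft.strip())


def meta_prompt(draft: str, ask_model: AskModel) -> str:
    """Ask the model for a better prompt, then run that improved prompt."""
    improved = ask_model(build_meta_prompt(draft))  # step 1: get a better prompt
    return ask_model(improved)  # step 2: answer using the improved prompt


if __name__ == "__main__":
    # Stub "model" for a dry run: it just returns the last line of its input,
    # so no API key or network access is needed.
    def echo_last_line(prompt: str) -> str:
        return prompt.splitlines()[-1]

    print(build_meta_prompt("Summarise this quarterly report for my team"))
    print(meta_prompt("Summarise this quarterly report for my team", echo_last_line))
```

In practice you would probably read and tweak the improved prompt before running step 2; the stub model here only demonstrates the flow.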

Category: Michael Kublers

The 20 Lessons on Tyranny

1. Do not obey in advance – Don’t voluntarily surrender power by anticipating what authoritarian leaders might want.
2. Defend institutions – Protect democratic institutions like courts, newspapers, laws, and labor unions through active participation.
3. Beware the one-party state – Support multi-party systems and democratic elections; consider participating in…

Claude – Some complaints and suggestions

I’ve been using and paying for Claude, the AI by Anthropic, for a while now, and there are some things that bug me about it. First, I’ll mention that I love Claude. The output is detailed and useful. I use Claude for thinking through hard problems, sometimes directly and sometimes through Perplexity or Cursor. I OFTEN use…

The Dictator’s Handbook – Summary

The Dictator’s Handbook and The Rules for Rulers: A Summary

Both “The Dictator’s Handbook” by Bruce Bueno de Mesquita and Alastair Smith and CGP Grey’s video “The Rules for Rulers” (based on the book) present a powerful framework for understanding political power called selectorate theory. This summary is provided by Claude.

Core Principles

At the…

Key Concepts from Yuval Noah Harari’s “Nexus”

Summary of “Nexus” by Yuval Noah Harari

This is a summary generated by Google’s Gemini 2.0 Flash Thinking model. It was given an 833 KB transcript of the Nexus audiobook, produced with Whisper AI speech-to-text. I couldn’t use Claude, my usual go-to, because there was so much content it was beyond the acceptable…

Summary of Flow by Mihaly Csikszentmihalyi

The Path to Flow: Key Insights from Mihaly Csikszentmihalyi’s Work on Optimal Experience

This post is a Claude-generated summary of the book “Flow: The Psychology of Optimal Experience” by Mihaly Csikszentmihalyi.

Have you ever been so completely absorbed in an activity that you lost track of time, forgot your worries, and felt a deep…

The Byzantine World of SM Tickets: A Tale of Bureaucracy Gone Wild – Dua Lipa edition

What should have been a simple ticket collection turned into a bureaucratic nightmare at SM Tickets. After an initial failed attempt at SM City Masinag’s service counter, the next day devolved into a labyrinth of redirections, digital scavenger hunts, and byzantine requirements. The final straw? A live video call with the ticket holder wasn’t sufficient authorization – they demanded a signed, physical letter, proving that in the age of digital convenience, SM Tickets remains steadfastly committed to making simple tasks unnecessarily complex.

Agency, Initiative and AIs

I asked Claude about the term agency and what it thinks an AI system would need in order to have it. I thought the response was a good answer to the argument about consciousness and AI systems. Basically, LLMs, even with reasoning, don’t have agency as we’d think of it, and that might be something we consider important for…

Summary of The Unaccountability Machine

This post covers the key ideas of “The Unaccountability Machine” by Dan Davies, a book that explores how modern systems and organizations have developed in ways that make it increasingly difficult to identify who is responsible when things go wrong.

The central concept Davies introduces is the “accountability sink” – systems where decisions are delegated to complex rulebooks or procedures, making it impossible to trace the source of mistakes. When something goes wrong, there’s often no clear person or entity that can be held responsible.

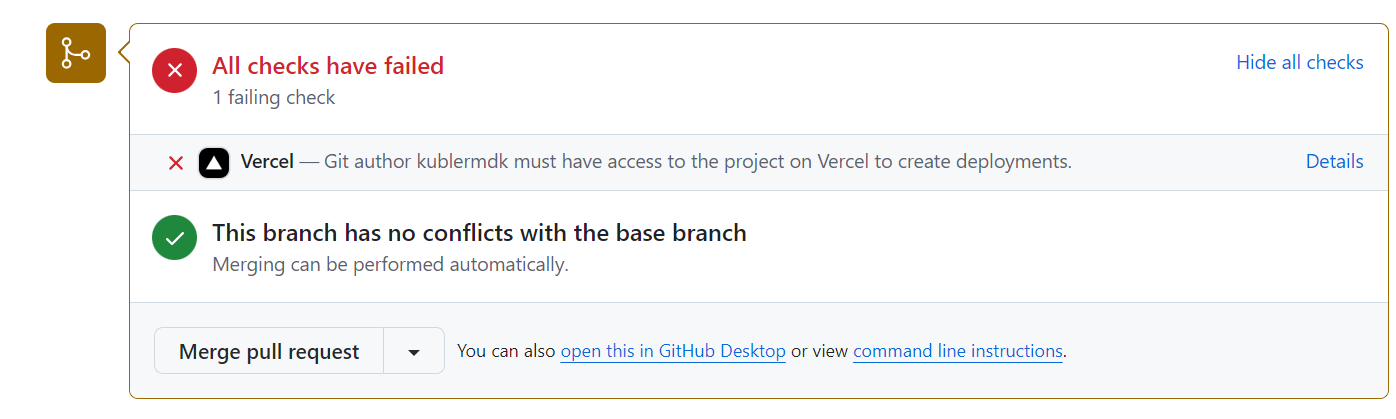

Vercel’s Stealth Move: How They Silently Broke Hobby Plan Deploys

In the fast-paced world of web development, unexpected changes to our tools can bring projects to a grinding halt. This is exactly what happened when Vercel, a popular hosting platform for static and serverless deployments, quietly altered their system in a way that broke our established deployment process. As a small business relying on Vercel’s Hobby plan, we found ourselves facing three days of failed builds with no warning or explanation. This article delves into our frustrating experience, the lack of communication from Vercel, and the broader implications of such unannounced changes for developers and businesses. It serves as a cautionary tale about the hidden costs of ‘free’ services and the importance of transparent communication in the tech industry.